Evolution is wasteful: many failures are needed to explore a problem space. After multiple rounds of experimentation and recombination, effective and novel solutions may emerge, but few companies have the time and resources to indulge in such a wasteful process.

Evolution, in its classic Darwinian form, is merely an effective way to develop a barely-good-enough solution. Rather than a wasteful Darwinian exploration of a problem space, it is cheaper and more effective to guide the process.

A typical Agile approach may divide a six-month initiative into 26 week-long iterations, creating multiple generations, or experiments, each building on the lessons learned from the preceding trial. Numerous opportunities for discovery and adaptation are produced in this way. But we cannot trust that providence alone will guide us efficiently to the best solution. We need to understand what evolution is good at and how best to harness it if we are to make the best use of our scarce resources.

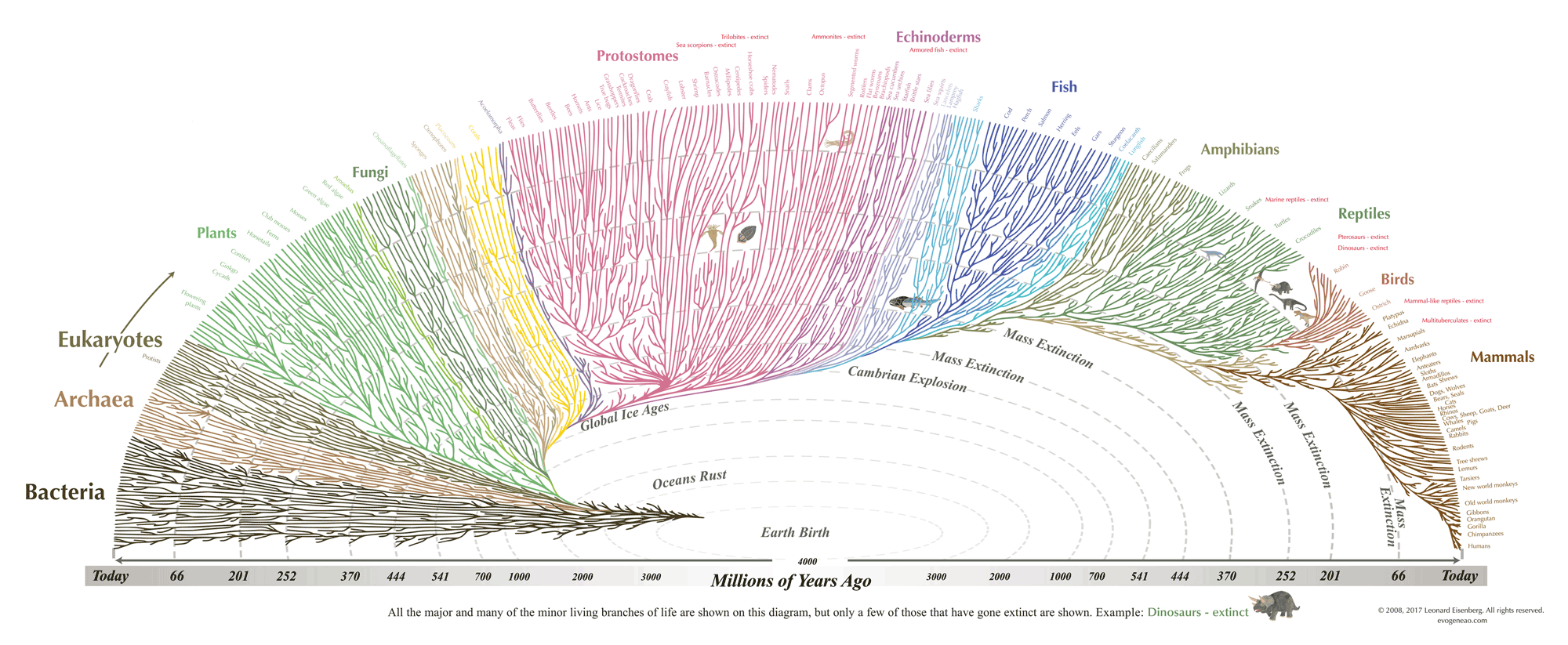

Life on Earth first appeared soon after the planet cooled, around 4 billion years ago. Multicellular animals did not arise until about 600 million years ago. For about 3 billion years, evolution busied itself with bootstrapping the basic mechanisms of the cell. It took roughly another 200 million years for animals with backbones to get out of the oceans. All the terrestrial backboned animals that have ever existed have lived in the last 10% of life's history on Earth. Evolution takes a long time to bootstrap.

Using a purely evolutionary approach, we are in danger of wasting too much time bootstrapping features that have been developed many times before. To avoid this, we should start with a rough design comprising a mashup of a few well-proven, pre-existing core components. We should reserve evolution for what will become the unique differentiators of the new solution, not its basic features. We should focus on evolving the features we don't understand, not those we do. By maximizing the use of open-source or commercial products for core components, we can obtain basic capabilities quickly without waste. All software is built from pre-existing software. Every solution developed today benefits from over 60 years of preceding software development. We must acknowledge this and use it to our advantage.

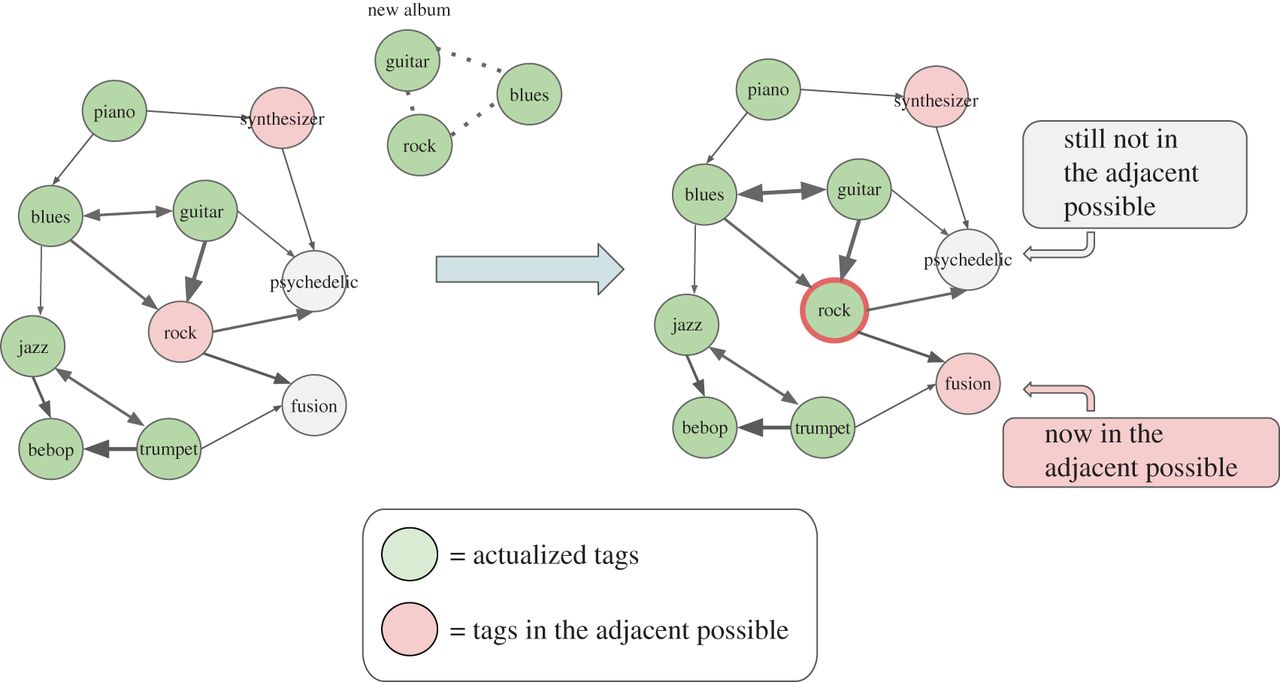

Knowing where to draw the line between reusing existing components and building new ones requires an honest assessment of the market requirement for novelty. The requirements must be challenged to identify the real need for innovation. Many compelling solutions have been built by combining a handful of existing components in a novel way. In fact, many, possibly most, so-called "innovations" are actually just novel combinations of pre-existing components and ideas.

Stuart Kauffman introduced the idea of the Adjacent Possible to describe the available opportunities for evolutionary innovation. Evolution innovates by advancing into new territory from the vantage point created by combining existing solutions. It is only by combining a few tested capabilities at the edge of the known solution space that it is possible to reach something new. Subsequent mathematical analysis has shown that this is indeed the way innovation proceeds. We should turn this finding into a guiding principle and continuously seek vantage points, created by combining existing solutions, from which we can launch our efforts to reach the adjacent possible.

The iterative structure of agile projects provides two significant benefits. First, it allows us to manage risk by assessing our exposure and adopting mitigating strategies at the start of each iteration. Second, it provides opportunities to obtain feedback from real users, or their proxies, at the end of each iteration and use it to improve the solution going forward. While this iterative approach allows us to adjust our solution as we proceed, it also places limits on how adaptive we can be. By understanding the nature of these limits, we can maximize the available benefits.

To adapt, or evolve, we must experiment and then eliminate failed solutions so better solutions can emerge. This approach means we will not progress at a steady rate. There will be setbacks; some iterations will disprove our hypotheses. We must understand that our tolerance for failure and our desire for innovation are flip sides of the same coin. We must speculate to accumulate.

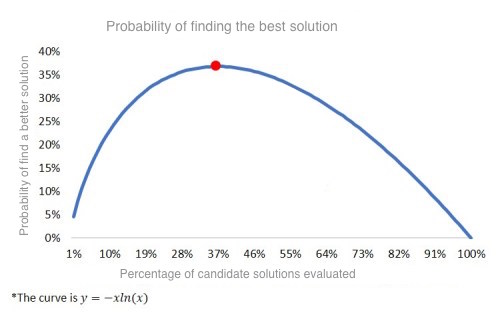

Each of our project iterations sets out to prove a hypothesis. If all hypotheses are proved correct, we have learned nothing. If all are proved wrong, we have wasted our time. There is an optimal success ratio that allows us to explore the largest number of possible solutions while simultaneously delivering the most functionality. We are seeking the best solution we can afford given the resources available to us. We are not seeking the absolute best solution, which is always beyond our budget, given the size of the solution space.

The Secretary problem teaches us that we don't need to interview every candidate for a job to hire one of the best. In the same way, we don't have to explore every possible solution to find a "good enough" one.
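To make the Secretary problem concrete, here is a minimal simulation sketch of its classic stopping rule: observe and reject roughly the first n/e candidates, then accept the first one who beats everyone seen so far. The function names and parameters (`secretary_choice`, `success_rate`, `trials`, `seed`) are illustrative choices, not part of the original argument.

```python
import math
import random


def secretary_choice(scores, cutoff):
    """Return the index of the candidate chosen by the cutoff rule.

    Reject the first `cutoff` candidates (they only set a benchmark), then
    accept the first later candidate who beats all of them. If nobody does,
    we are left with the last candidate.
    """
    best_seen = max(scores[:cutoff], default=float("-inf"))
    for i in range(cutoff, len(scores)):
        if scores[i] > best_seen:
            return i
    return len(scores) - 1


def success_rate(n, trials=20_000, seed=1):
    """Estimate how often the n/e cutoff rule picks the single best of n candidates."""
    rng = random.Random(seed)
    cutoff = round(n / math.e)
    hits = 0
    for _ in range(trials):
        scores = list(range(n))  # higher score = better candidate
        rng.shuffle(scores)      # candidates arrive in random order
        chosen = secretary_choice(scores, cutoff)
        if scores[chosen] == n - 1:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    print(f"{success_rate(100):.3f}")
```

Running it should print a value close to 1/e (about 0.37): the rule finds the very best candidate surprisingly often while never revisiting a rejected option, which is the same trade-off between exploration and commitment that iterations face.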

We should explicitly budget the amount of solution exploration we can afford for any initiative and focus on evaluating only the hypotheses that offer the highest possible return for the least risk of failure. As a rule of thumb, we typically aim for 60% low- or no-risk iterations and 40% higher-risk iterations. This mix results in 80% of iterations delivering successfully and 20% failing or only partially succeeding. Overall, we waste as little as possible while still allowing ourselves to learn as we go and evolve the solution.
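One way to see how a 60/40 mix could yield roughly 80% successful iterations is a simple expected-value calculation. The per-category success probabilities below are assumptions, not figures from the text: we suppose low-risk iterations almost always succeed and higher-risk iterations succeed about half the time. `expected_success` is a hypothetical helper.

```python
def expected_success(mix):
    """Expected fraction of successful iterations.

    mix: list of (share_of_iterations, success_probability) pairs.
    """
    total_share = sum(share for share, _ in mix)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("iteration shares must sum to 1")
    return sum(share * p for share, p in mix)


# ASSUMED success probabilities, chosen for illustration only.
mix = [
    (0.60, 1.00),  # low- or no-risk iterations: assumed to (almost) always succeed
    (0.40, 0.50),  # higher-risk iterations: assumed to succeed about half the time
]

if __name__ == "__main__":
    rate = expected_success(mix)
    print(f"succeed: {rate:.0%}, fail or partial: {1 - rate:.0%}")
```

Under these assumptions, 0.6 × 1.0 + 0.4 × 0.5 = 0.8, matching the 80/20 split. Changing the assumed probabilities shows how quickly a riskier mix erodes delivery.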

Even with the inevitable waste caused by speculation, we will incrementally accumulate benefits from prior iterations. As we do so, it will become increasingly difficult to revise or replace earlier contributions as they become embedded in the solution. Evolution can sometimes lead down a blind alley from which the only way out is to go back. If the cost of retracing our steps is high, we may face a dilemma: either accept that our future choices are constrained by the path we have already taken, or pay a high price by giving up the progress we have made so we can back up and take another, more beneficial, path. This situation is a classic example of negative evolutionary path dependency.

Fortunately, many software design best practices address this problem. Modularity of code, encapsulation through well-defined APIs, and microservices, to name a few, all make it easier to replace or refactor portions of a solution. All agile development teams will face this dilemma at some point; it is a consequence of the evolutionary approach. In these cases, we must compare the two alternatives, pressing on or going back, and evaluate which delivers the better outcome. Several factors must be evaluated.

The problem we face is to find the break-even point of the investment in removing the path dependency. If the benefit will directly contribute to the success of the initiative, generate returns quickly, and be achievable at an acceptable cost, then it may be worth it. If the benefit is indirect, will only create returns in the distant future, or will come at too high a price, there is probably a better alternative.
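A crude way to frame the break-even point is to count how many iterations it takes for the benefit of removing a path dependency to repay its cost, and compare that with the iterations left in the initiative. This is a simplified sketch under stated assumptions: cost and benefit are measured in the same unit (for example, team-iterations of effort), the benefit is constant once it starts, and discounting is ignored. All names (`break_even_iteration`, `worth_removing`, `delay`) are hypothetical.

```python
import math


def break_even_iteration(cost, benefit_per_iteration, delay=0):
    """Iterations until cumulative benefit repays the one-off cost.

    cost: one-off effort spent removing the dependency
    benefit_per_iteration: effort saved (or value gained) per iteration afterwards
    delay: iterations before the benefit starts to flow
    """
    if benefit_per_iteration <= 0:
        return math.inf  # an indirect or non-existent benefit never breaks even
    return delay + math.ceil(cost / benefit_per_iteration)


def worth_removing(cost, benefit_per_iteration, delay, iterations_remaining):
    """True if the investment pays for itself before the initiative ends."""
    return break_even_iteration(cost, benefit_per_iteration, delay) <= iterations_remaining


if __name__ == "__main__":
    # Hypothetical example: 3 iterations of rework, saving half an iteration
    # of effort per iteration, starting one iteration after the rework.
    print(break_even_iteration(3, 0.5, delay=1))
    print(worth_removing(3, 0.5, 1, iterations_remaining=10))
    print(worth_removing(3, 0.5, 1, iterations_remaining=5))
```

In the hypothetical example the rework breaks even after 7 iterations, so it pays off with 10 iterations remaining but not with 5. A longer delay or a smaller benefit pushes the break-even point beyond the end of the initiative, which is the "distant future" case described above.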

The decision to remove a path dependency is an economic decision as much as an engineering decision. Such choices are never easy; many projects have sacrificed short-term success attempting to refactor or replace components that would return little, or no, benefit in a reasonable time frame. We must guard our limited resources and spend them wisely. Wasting resources on poor investments that return little benefit must be avoided.